Introduction

This is the central location for all information about the website and the .ComCom.

Want to know something about using the website? Click here.

Want to make a suggestion for the website or ask the .ComCom something (for example, to change a piece of content)? Click here.

Want to know how the .ComCom works, or what you can learn at the .ComCom? Click here.

The rest of this documentation is dedicated to explaining technical details, the choices we made, how to set up a development environment and how to deploy to production (and what that even means). We use English for the sake of future compatibility and so that anything written here is easy to share online (and because many terms don’t have Dutch equivalents).

Changelog

Here we keep track of all changes. This specifically concerns code, not content. The frontend is updated more often than shown here (and has no specific versions), but the important changes are summarized here.

- Frontend (0232c32) - 2024-04-05

- 2.2.1 - 2024-01-10

- 2.2.0 - 2024-01-10

- 2.1.1 - 2023-12-05

- 2.1.0 - 2023-12-05

- 2.0.1 - 2023-10-25

- 2.0.0 - 2023-10-17

- 1.1.0 - 2023-03-18

- 1.0.0 - 2023-01-11

- Pre-1.0.0

Frontend (0232c32) - 2024-04-05

Changed (frontend)

- (February/March) Jesper has updated a lot of content, including most of the committee photos

- (March) Theme has been updated from winter to spring

- (March) Records have been updated

Added (frontend)

- A new “Pas aan” (edit) feature has been added for admins to manage the classifications (klassementen). This makes use of the 2.2 backend update.

- It allows you to start new classifications

- You can select classifications and modify their start, end and freeze date

- You can make the database recompute the points based on whether or not you want to show the points added after freezing

- Members can see when an event was last added and when it was frozen

- (February) An Oud Leden Dodeka (OLD) page has been added
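The recompute option described above comes down to choosing which events count towards the totals. Below is a minimal sketch, not our backend code. It assumes that each awarded point is stored with the date of its event, and that an event falling on the freeze date itself still counts. EventPoints and totals are hypothetical names:

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class EventPoints:
    """Hypothetical record: points one user received for one event."""
    user_id: str
    event_date: date
    points: int


def totals(events: list[EventPoints], freeze_date: date,
           include_after_freeze: bool) -> dict[str, int]:
    """Sum points per user, optionally ignoring events after the freeze date."""
    result: dict[str, int] = defaultdict(int)
    for event in events:
        # Assumption: an event on the freeze date itself still counts.
        if include_after_freeze or event.event_date <= freeze_date:
            result[event.user_id] += event.points
    return dict(result)


events = [
    EventPoints("anna", date(2024, 3, 1), 3),
    EventPoints("anna", date(2024, 5, 1), 2),
    EventPoints("bram", date(2024, 5, 2), 4),
]
print(totals(events, date(2024, 4, 15), include_after_freeze=False))  # {'anna': 3}
print(totals(events, date(2024, 4, 15), include_after_freeze=True))   # {'anna': 5, 'bram': 4}
```

The first call shows the standings members see while the classification is frozen. The second call shows what admins get when they include the points added after freezing.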

2.2.1 - 2024-01-10

Tip contributed code to this release.

Released into production on 2024-01-11.

Deploy

- Fix production image version

2.2.0 - 2024-01-10

Note: this version was not released into production.

This is the first full tidploy release of the backend, with the mail server moved to a dedicated provider. This should make all features required for the classifications fully work.

Senne and Tip contributed code to this release. We are now at 591 commits for the backend (including the 150+ commits that came from the deployment repository).

Added (backend)

- <server>/admin/class/get_meta/{recent_number}/ (returns the most recent classifications of each type, recent_number/2 for each type)

- <server>/admin/class/new/ (creates a new points and training classification)

- <server>/admin/class/modify/ (modifies a classification)

- <server>/admin/class/remove/{class_id} (removes the classification with id class_id)

- <server>/member/get_with_info/{rank_type}/ (will replace <server>/members/class/get/{rank_type}/, but was added under a different name to avoid a breaking change; it also returns last_updated and whether the classification is frozen)

- The last_updated column is now updated when new events are added.

- (Senne) <server>/admin/update/training has been added (not yet used by the frontend) as the start of the training registration update.

- New update_by_unique store method for updating an entire row based on a simple where condition.
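The update_by_unique method described above updates a whole row that is selected by a single unique column. Below is a minimal sketch of that idea using Python's built-in sqlite3 module, so that it runs on its own. The function signature and the users table are illustrative assumptions, not our actual store module. Table and column names cannot be passed as query parameters, so the sketch accepts only plain identifiers. These names should always come from our own code, never from user input:

```python
import sqlite3
from typing import Any


def update_by_unique(conn: sqlite3.Connection, table: str, row: dict[str, Any],
                     unique_col: str, unique_val: Any) -> int:
    """Hypothetical helper: set every column in `row` on the row where
    `unique_col` equals `unique_val`. Returns the number of updated rows."""
    if not row:
        raise ValueError("nothing to update")
    # Identifiers cannot be bound as parameters, so only allow plain names.
    for name in (table, unique_col, *row):
        if not name.isidentifier():
            raise ValueError(f"invalid identifier: {name!r}")
    assignments = ", ".join(f"{col} = ?" for col in row)
    query = f"UPDATE {table} SET {assignments} WHERE {unique_col} = ?"
    cursor = conn.execute(query, (*row.values(), unique_val))
    return cursor.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id TEXT PRIMARY KEY, firstname TEXT, lastname TEXT)")
conn.execute("INSERT INTO users VALUES ('u1', 'Anna', 'Jansen')")
updated = update_by_unique(conn, "users", {"firstname": "Anne", "lastname": "de Vries"},
                           "user_id", "u1")
print(updated)  # 1
```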

Internal (backend)

- The backend and deployment repositories have been merged, making deployment and versioning a lot easier. We now use a number of Nu scripts instead of shell scripts, and deployment now uses tidploy. This was done in a series of commits from 4602abd to around 414bc23.

- Logging has been much improved throughout the backend, so errors can now be seen properly. Error handling has also been improved (especially for the store module). Some common startup errors now have better messages that indicate what’s wrong. Documentation has been improved and all database queries now properly catch some specific errors. See #31.

2.1.1 - 2023-12-05

Tip contributed code to this release.

Released into production on 2023-12-05.

Fixed (backend)

- Remove debug eduinstitution value during registration

2.1.0 - 2023-12-05

Note: this version was not released into production.

Matthijs and Tip contributed code to this release.

Stats

We are now at 359 commits on the backend and 922 commits on the frontend.

Added (frontend)

- An explanation of the classification has been added to the classification page.

- There is now an NSK Meerkamp role.

Fixed (frontend)

- Last names are no longer all caps on the classification page and are shown in full.

Added (authpage)

- Add Dodeka logo to all pages, using the new Title component.

Changed (authpage)

- Use flex layout to improve alignment when pressing “forgot password”, so the layout no longer jumps slightly

- Update node version to v20

- Update dependencies

Fixed (authpage)

- Educational institution is now recorded even if it is not changed from the default (TU Delft)

Added (backend)

- Admin: Synchronization of the total points per user and events, as well as a more consistent naming scheme for the endpoints. All old endpoints are retained for backwards compatibility. Furthermore, admins can now request additional information about events on a user, event or class basis (see the PR).

- <server>/admin/class/sync/ (force synchronization of the total points per user, without having to add a new event)

- <server>/admin/class/update/ (same as the previous <server>/admin/ranking/update, which still exists for backwards compatibility)

- <server>/admin/class/get/{rank_type}/ (same as the previous <server>/admin/classification/{rank_type}/, which still exists for backwards compatibility)

- <server>/admin/class/events/user/{user_id}/ (get all events for a specific user_id, with the class_id and rank_type as query parameters)

- <server>/admin/class/events/all// (get all events, with the specific class_id and rank_type as query parameters)

- <server>/admin/class/users/event/{event_id}/ (get all users for a specific event_id)

- Member: Only renames, as described above.

- <server>/members/class/get/{rank_type}/ (same as the previous <server>/members/classification/{rank_type}/, which still exists for backwards compatibility)

- <server>/members/profile/ (same as the previous <server>/res/profile, which still exists for backwards compatibility)
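For the admin event endpoints, class_id and rank_type are passed as query parameters. Below is a minimal client-side sketch that uses only the standard library. The server URL is a placeholder. Sending the access token in an Authorization: Bearer header is an assumption based on common OAuth usage; it is not documented here:

```python
import json
import urllib.request
from urllib.parse import quote, urlencode


def events_for_user_url(server: str, user_id: str, class_id: int, rank_type: str) -> str:
    """Build <server>/admin/class/events/user/{user_id}/ with its query parameters."""
    query = urlencode({"class_id": class_id, "rank_type": rank_type})
    return f"{server.rstrip('/')}/admin/class/events/user/{quote(user_id, safe='')}/?{query}"


def get_json(url: str, access_token: str):
    """Fetch and decode a JSON response (assumes a Bearer token with admin rights)."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder server; get_json is not called here because it needs a live backend.
print(events_for_user_url("https://api.example.com", "u1", 3, "points"))
# https://api.example.com/admin/class/events/user/u1/?class_id=3&rank_type=points
```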

Changed (backend)

- Types: The entire backend now passes mypy’s type checker (see the PR)!

- Better context/dependency injection: The previous system was not perfect and it was still not easy to write tests. Lots of improvements have been made, utilizing FastAPI Depends and making it possible to easily wrap a single function call to make the caller testable. See #64, #65, #70 and #71.

- Better logging: Logging had been lackluster while we waited for a better solution. This has now arrived with the adoption of loguru. Logging is now much more nicely formatted, and in the future it will be easy to collect and show the logs in a central place, although that is not yet implemented. Some of the startup code has also been refactored as part of the logging effort.

- Check for role on router basis: For certain routers, we now check whether they are requested by admins or members for all routes inside the router, making it harder to forget to add a check. The header checking logic has also been refactored and some tests have been added. This is much better than the manual if check we did before. It also includes some minor refactoring and fixes for access token verification.

- There are now different router tags, which makes it easier to find all the different API endpoints in the OpenAPI docs view.
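Checking the role once per router means that every route added to that router is protected automatically. In FastAPI this is commonly done through the dependencies parameter of APIRouter. The plain-Python sketch below only illustrates the idea. It assumes the access token has already been verified and that its claims are available as a dict holding a space-separated scope string. The names guard_routes and require_scope, and the route table, are hypothetical:

```python
from typing import Any, Callable

Request = dict[str, Any]  # hypothetical: already-verified token claims
Handler = Callable[[Request], Any]


def require_scope(required: str) -> Callable[[Request], None]:
    """Return a check that raises unless `required` is in the request's scope."""
    def check(request: Request) -> None:
        if required not in request.get("scope", "").split():
            raise PermissionError(f"missing scope: {required}")
    return check


def guard_routes(routes: dict[str, Handler],
                 check: Callable[[Request], None]) -> dict[str, Handler]:
    """Wrap every handler so `check` runs first, like a router-level dependency."""
    def wrap(handler: Handler) -> Handler:
        def guarded(request: Request) -> Any:
            check(request)
            return handler(request)
        return guarded
    return {path: wrap(handler) for path, handler in routes.items()}


admin_routes = guard_routes(
    {"/admin/users/": lambda request: ["all user data"]},
    require_scope("admin"),
)
print(admin_routes["/admin/users/"]({"scope": "member admin"}))  # ['all user data']
```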

Fixed (backend)

- An error is no longer thrown on the backend when a password reset is requested for a user that does not exist.

Internal (backend)

- Live query tests: in the GitHub Actions CI we now actually run some tests against a live database using Actions service containers. This means that, once the tests pass, we can be much more sure we did not completely break database functionality. See the PR.

- Add request_id to logger using loguru’s contextualize

- Added logging to all major user flows (signup, onboard, change email/password), which also displays reset URLs etc., so email doesn’t have to be turned on during local development
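loguru's contextualize attaches extra values, such as a request_id, to every log message emitted inside a block. For readers new to the pattern, the sketch below is a swapped-in standard-library analogue built from contextvars and a logging.Filter. It is not our loguru code. The logger name and the 8-character id format are arbitrary choices for the sketch. Each asyncio task runs in its own copy of the context, so concurrent requests do not mix up their ids:

```python
import contextvars
import logging
import uuid

# Holds the id of the request currently being handled ("-" outside a request).
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Copy the current request id onto every log record."""
    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


class ListHandler(logging.Handler):
    """Collect formatted log lines in memory (for the sketch only)."""
    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


def make_logger() -> tuple[logging.Logger, ListHandler]:
    logger = logging.getLogger("dodeka.sketch")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = ListHandler()
    handler.addFilter(RequestIdFilter())
    handler.setFormatter(logging.Formatter("%(request_id)s %(message)s"))
    logger.handlers.clear()
    logger.addHandler(handler)
    return logger, handler


def handle_request(logger: logging.Logger, path: str) -> None:
    token = request_id_var.set(uuid.uuid4().hex[:8])
    try:
        logger.info("handling %s", path)  # every message here carries the id
    finally:
        request_id_var.reset(token)


logger, handler = make_logger()
handle_request(logger, "/members/profile/")
print(handler.lines)
```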

2.0.1 - 2023-10-25

Tip contributed code to this release.

Released into production on 2023-10-25.

Fixed (backend)

- Fix update email: If you requested an email change twice, but only confirmed it after both emails were sent, it is no longer possible to change it twice. After changing it using either one, the other one is invalidated.

- Changed package structure so it is possible to extract the schema package and load it on the production server to run database schema migrations.
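The email change fix comes down to treating all pending change requests of one user as a group: confirming any one of them consumes the whole group. Below is a minimal in-memory sketch of that rule. The EmailChangeStore class and its methods are hypothetical. A real backend would keep these tokens in shared storage with an expiry, not in a Python dict:

```python
import secrets
from typing import Optional


class EmailChangeStore:
    """Hypothetical store of pending email changes, keyed by user id."""

    def __init__(self) -> None:
        # user_id -> {token: requested new email}
        self._pending: dict[str, dict[str, str]] = {}

    def request_change(self, user_id: str, new_email: str) -> str:
        """Create a token; earlier requests stay valid until one of them is used."""
        token = secrets.token_urlsafe(16)
        self._pending.setdefault(user_id, {})[token] = new_email
        return token

    def confirm(self, user_id: str, token: str) -> Optional[str]:
        """Return the new email if the token is valid, invalidating all other requests."""
        new_email = self._pending.get(user_id, {}).get(token)
        if new_email is None:
            return None
        del self._pending[user_id]  # the other pending tokens become unusable
        return new_email


store = EmailChangeStore()
first = store.request_change("u1", "old.request@example.com")
second = store.request_change("u1", "new.request@example.com")
print(store.confirm("u1", first))   # old.request@example.com
print(store.confirm("u1", second))  # None
```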

2.0.0 - 2023-10-17

Note: this version was not released into production.

Leander, Matthijs and Tip contributed code to this release.

Added (backend)

- Admin: Roles, using OAuth scope mechanism, as well as classifications stored in the database, computed based on each event.

- <server>/admin/scopes/all/ (get scopes for all users)

- <server>/admin/scopes/add/ (add scope for a user)

- <server>/admin/scopes/remove/ (remove scope for a user)

- <server>/admin/users/ids/ (get all user ids)

- <server>/admin/users/names/ (get all user names, to match for rankings)

- <server>/admin/ranking/update (add an event for classifications)

- <server>/admin/classification/{rank_type}/ (see current points for a specific classification)

- Member:

- <server>/members/classification/{rank_type}/ (see current points for a specific classification, changes hidden after a certain point)
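In OAuth, a scope is a space-delimited list of strings (RFC 6749, section 3.3). Adding or removing a role therefore amounts to editing that list. Below is a minimal sketch. The role names "member" and "admin" and the helper functions are assumptions for illustration, not taken from our backend:

```python
def add_scope(scope: str, new: str) -> str:
    """Add `new` to a space-separated scope string, keeping entries unique."""
    parts = scope.split()
    if new not in parts:
        parts.append(new)
    return " ".join(parts)


def remove_scope(scope: str, old: str) -> str:
    """Remove every occurrence of `old` from a space-separated scope string."""
    return " ".join(part for part in scope.split() if part != old)


def has_scope(scope: str, required: str) -> bool:
    return required in scope.split()


scope = add_scope("member", "admin")
print(scope)                         # member admin
print(has_scope(scope, "admin"))     # True
print(remove_scope(scope, "admin"))  # member
```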

Added (frontend)

- Member -> Classification page

- Admin -> Classification page and Add new event

- Roles can be changed in user overview

Changed (backend)

- Major refactor of backend code, which separates auth code from app-specific code

- Updated some major dependencies, including Pydantic to v2

- Database schema update:

- classifications table added to store a classification, which lasts for half a year and can be either “points” or “training”.

- class_events table added, which stores all events that have been held (borrel, NSK, training, …), possibly related to a specific classification.

- class_event_points table added, which stores how many points a specific user has received for a specific event. In general, users will receive the same number of points per event, but this flexibility allows us to change that later.

- class_points table added, which stores the aggregated total points of a user for a specific classification. When an event is added, this table should be updated using the correct update query.
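Below is a minimal sketch of how these four tables relate, and of how class_points can be rebuilt from the per-event points after an event is added. It uses SQLite through Python's sqlite3 module so that it runs on its own; the database in this release is PostgreSQL. All column names are assumptions, not our actual schema:

```python
import sqlite3

SCHEMA = """
CREATE TABLE classifications (
    classification_id INTEGER PRIMARY KEY,
    type TEXT NOT NULL CHECK (type IN ('points', 'training')),
    start_date TEXT NOT NULL,
    end_date TEXT NOT NULL
);
CREATE TABLE class_events (
    event_id INTEGER PRIMARY KEY,
    classification_id INTEGER REFERENCES classifications (classification_id),
    category TEXT NOT NULL,  -- e.g. borrel, NSK, training
    event_date TEXT NOT NULL
);
CREATE TABLE class_event_points (
    event_id INTEGER NOT NULL REFERENCES class_events (event_id),
    user_id TEXT NOT NULL,
    points INTEGER NOT NULL,
    PRIMARY KEY (event_id, user_id)
);
CREATE TABLE class_points (
    classification_id INTEGER NOT NULL REFERENCES classifications (classification_id),
    user_id TEXT NOT NULL,
    points INTEGER NOT NULL,
    PRIMARY KEY (classification_id, user_id)
);
"""


def recompute_class_points(conn: sqlite3.Connection, classification_id: int) -> None:
    """Rebuild the aggregated totals of one classification in a single transaction."""
    with conn:
        conn.execute("DELETE FROM class_points WHERE classification_id = ?",
                     (classification_id,))
        conn.execute(
            """
            INSERT INTO class_points (classification_id, user_id, points)
            SELECT e.classification_id, p.user_id, SUM(p.points)
            FROM class_event_points AS p
            JOIN class_events AS e ON e.event_id = p.event_id
            WHERE e.classification_id = ?
            GROUP BY e.classification_id, p.user_id
            """,
            (classification_id,),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO classifications VALUES (1, 'points', '2024-01-01', '2024-06-30')")
conn.executemany("INSERT INTO class_events VALUES (?, ?, ?, ?)",
                 [(1, 1, "borrel", "2024-01-10"), (2, 1, "training", "2024-01-12")])
conn.executemany("INSERT INTO class_event_points VALUES (?, ?, ?)",
                 [(1, "anna", 3), (2, "anna", 2), (1, "bram", 3)])
recompute_class_points(conn, 1)
print(conn.execute("SELECT user_id, points FROM class_points ORDER BY user_id").fetchall())
# [('anna', 5), ('bram', 3)]
```

Recomputing the totals from scratch is simpler to get right than incrementally adding points. It is also what a forced synchronization would need anyway.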

Changed (postgres)

- Updated from Postgres 14 to 16

Changed (redis)

- Updated from Redis 6.2 to 7.2

1.1.0 - 2023-03-18

Released into production.

Changed (backend)

- Update dependencies, including updating Python to 3.11 and SQLAlchemy 2

Fixed (server)

- Docker container no longer accumulates core dumps, which crashed the server after 1-2 weeks

1.0.0 - 2023-01-11

Initial release of the FastAPI backend server, PostgreSQL database and Redis key-value store. Released into production on 2023-01-11.

Added (backend)

- Login: Mostly OAuth 2.1-compliant authentication/authorization system, using the authorization code flow. Authentication is done using OPAQUE:

- <server>/oauth/authorize/ (Authorization Endpoint, initialization)

- <server>/oauth/callback/ (Authorization Endpoint, after authentication)

- <server>/oauth/token/ (Token Endpoint)

- <server>/login/start/ (start OPAQUE password authentication)

- <server>/login/finish/ (finish OPAQUE authentication)

- Registration: Registration/onboarding flow, which requires confirmation of AV`40 signup.

- <server>/onboard/signup/ (initiate signup, board will confirm)

- <server>/onboard/email/ (confirm email)

- <server>/onboard/confirm/ (board confirms signup)

- <server>/onboard/register/ (start OPAQUE registration)

- <server>/onboard/finish/ (finish OPAQUE registration and send registration info)

- Update: Some information needs to be updated or changed.

- <server>/update/password/reset/ (initiate password reset)

- <server>/update/password/start/ (start OPAQUE set new password)

- <server>/update/password/finish/ (finish OPAQUE set new password)

- <server>/update/email/send/ (initiate email change)

- <server>/update/email/check/ (finish email change after authentication)

- <server>/update/delete/url/ (start account deletion, create URL)

- <server>/update/delete/check/ (confirm deletion after authentication)

- Admin: Get information that requires an admin account.

- <server>/admin/users/ (get all user data)

- Members: Get information for members.

- <server>/members/birthdays/ (get member birthdays)
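OAuth 2.1 requires PKCE for the authorization code flow. The client sends a hashed code_challenge to the authorization endpoint. Later, at the token endpoint, it proves it holds the original code_verifier. Below is a minimal sketch of the S256 method from RFC 7636, assuming that is the method in use. The function names are hypothetical. The OPAQUE password steps are a separate part of the flow and are not shown:

```python
import base64
import hashlib
import secrets


def b64url(data: bytes) -> str:
    """Base64url encoding without '=' padding, as RFC 7636 requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_code_verifier() -> str:
    # 32 random bytes encode to 43 characters, the minimum length allowed.
    return b64url(secrets.token_bytes(32))


def s256_challenge(code_verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier)))"""
    return b64url(hashlib.sha256(code_verifier.encode("ascii")).digest())


def verify(code_verifier: str, stored_challenge: str) -> bool:
    """What the token endpoint checks before issuing tokens."""
    return secrets.compare_digest(s256_challenge(code_verifier), stored_challenge)


verifier = make_code_verifier()
challenge = s256_challenge(verifier)  # assumption: sent to <server>/oauth/authorize/
print(verify(verifier, challenge))    # True; assumption: checked at <server>/oauth/token/
```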

Added (authpage)

- Login page

- Registration page

- Other confirmation pages necessary for backend functionality

Added (frontend)

- Profile page

- Leden (Members) page -> Verjaardagen (Birthdays)

- Admin page -> Ledenoverzicht (member overview)

- Use React Query for getting data from backend

- Use Context from React for authentication state

- Redirect pages necessary for OAuth

- Confirmation pages for info update

Added (server)

- Docker container that contains the FastAPI backend server, as well as static files for serving the authpage.

Added (postgres)

- Docker container with PostgreSQL server

Added (redis)

- Docker container with Redis server

Pre-1.0.0

The frontend went live in June 2021 and, before the release of the backend, was regularly updated on a rolling release schedule. The frontend is not versioned.

How do you use the website?

Are you on the board and want to know about the admin functionality? Click here.

Are you a member of D.S.A.V. Dodeka and want to know more about what you can do on the website? Then take a look at the following pages:

Are you a member of a committee and want to know how you can use the website? Then see the following topics. If what you want isn’t listed, contact us. A lot is possible!

Creating an account

OUTDATED: this still refers to the old backend/login

Creating an account starts at the Word lid! (Become a member) page on the website.

Press the “Schrijf je in!” (Sign up!) button. This opens a screen where you can give us some basic information. You can only sign up if you agree to the privacy policy, because we need to store information when you sign up for Dodeka.

After filling in your details, press “Schrijf je in via AV`40” (Sign up via AV`40). AV`40 is our parent association. If you become a member of Dodeka, you also become a member of AV`40. The official membership administration also goes through AV`40, which is why you are redirected to their sign-up page.

It is important that you check the option “Ik wil lid worden van DSAV Dodeka (de studentenatletiekvereniging van AV’40 Delft)” (I want to become a member of DSAV Dodeka, the student athletics association of AV’40 Delft) at the bottom.

After signing up, you have probably already received an email from comcom@dsavdodeka.nl asking you to confirm your email address.

Click the button in that email to let our system know that your email address is correct. After this you will have to wait a while, because the board has to link your sign-up on our website to the AV`40 membership administration. As soon as they have done this, you will receive a message asking you to officially register on our website. This will be sent to the email address you entered on our website (so not the one you gave AV`40).

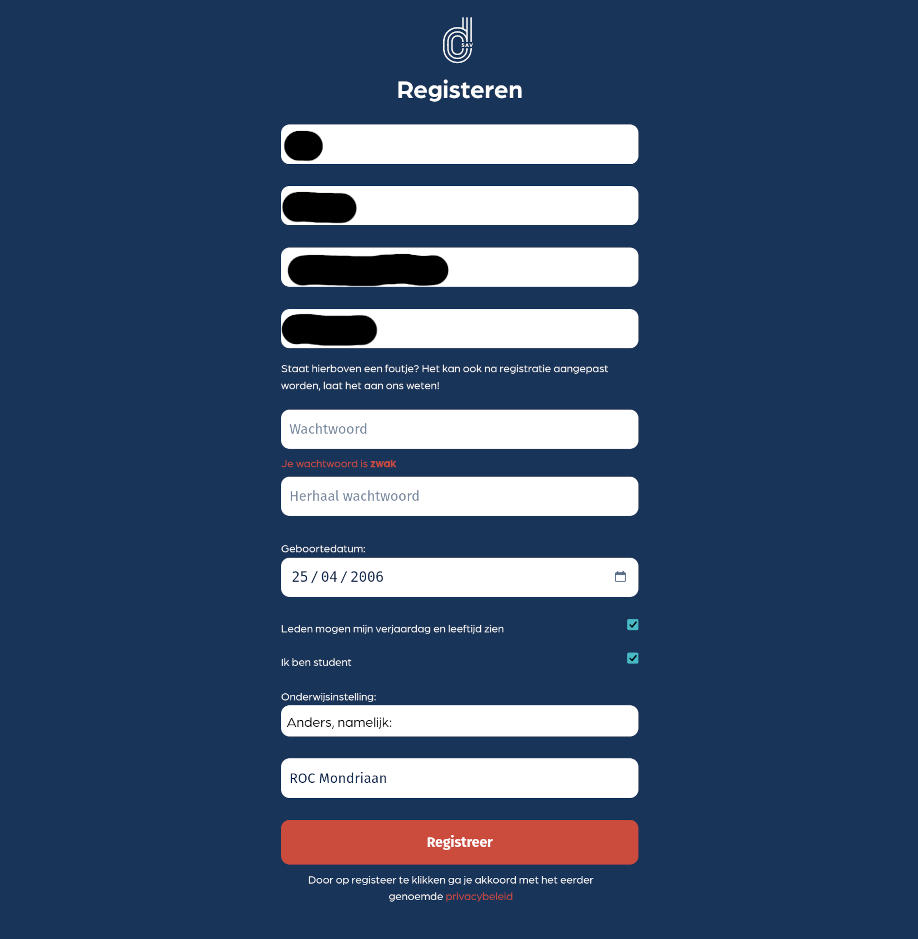

Registering

The email contains a link to officially register on our website. This is required if you want to train.

You can give permission to show your birthday and age to other members. Who knows, you might get lots of congratulations!

After pressing “Registreer” (Register), you will be redirected to the website, where you can then log in by pressing the icon at the top right.

Fun pages

Classifications

Records

Committee email

OUTDATED: We now use mail directly from Google

We recently switched to a new provider, https://junda.nl.

Change your password

You can change the email password via webmail.dsavdodeka.nl. We strongly recommend changing the password given to you by the .ComCom! You do this under “Edit Your Settings -> Password & Security”. Once you have changed it, you have to redo the instructions below (so you need to set it again under both ‘Mail sturen als’ and ‘E-mail bekijken’).

Step-by-step guide

Does your committee already use an @dsavdodeka.nl address?

Do you use it via Gmail?

Request the new password by sending a message to the .ComCom. Set it up again; see the instructions here.

Do you use it via mail.dsavdodeka.nl?

Request the new password by sending a message to the .ComCom. Then go to webmail.dsavdodeka.nl, which is the new place where you can always access your mail! We recommend using it via Gmail, which generally works a bit more smoothly. To do so, create a Gmail account (e.g. dies.dodeka@gmail.com) and follow the instructions below.

Do you currently use a Gmail account?

Then it’s time to switch to an @dsavdodeka.nl address! You can keep using the same inbox via Gmail.

Open Gmail on your PC or laptop.

- Press the gear icon (Instellingen, i.e. Settings) at the top right, next to your profile picture.

- Press “Alle instellingen bekijken”.

- Choose the “Accounts en import” tab. This may have changed; in any case you want to find two things: “Mail sturen als:” and “Email bekijken uit andere accounts” (or something similar).

First, we set up “Mail sturen als”:

Mail sturen als:

- Press “Nog een e-mailadres toevoegen”.

- Choose a name. Fill in the correct @dsavdodeka.nl address, for example dies@dsavdodeka.nl. This must be the address you received from the .ComCom. “Beschouwen als alias” can stay checked.

- For the SMTP server, fill in mail.dsavdodeka.nl with port 465.

- Username: again your email address, e.g. dies@dsavdodeka.nl.

- Password: fill in the password you received from the .ComCom (this can be different from your Gmail password).

- Save the changes. (Don’t change anything else.)

- After this, “Mail sturen als” may show 2 options. Press “als standaard instellen” next to your new @dsavdodeka.nl address (e.g. dies@dsavdodeka.nl).

- Change “Bij het beantwoorden van een bericht” to “Altijd antwoorden vanaf mijn standaardadres (nu dies@dsavdodeka.nl)”, if it wasn’t already set.

Now we make sure you also receive everything in Gmail.

E-mail bekijken uit andere accounts:

- Press “Een e-mailaccount toevoegen”.

- Then choose “E-mail importeren uit mijn andere account (POP3)”.

- Username: your full email address (e.g. dies@dsavdodeka.nl).

- Password: fill in the password you received from the .ComCom (this can be different from your Gmail password).

- For the POP server, fill in mail.dsavdodeka.nl with port 995.

- Check “Een kopie van opgehaalde berichten op de server achterlaten.”

- Check “Altijd een beveiligde verbinding (SSL) gebruiken wanneer e-mailberichten worden opgehaald” (this is important!).

- Press “Account toevoegen”.

Test whether it works by sending an email to another address and by sending an email to your @dsavdodeka.nl address. If it doesn’t work, contact the .ComCom.

Board

OUTDATED: this still refers to the old backend/login

A number of administrative tasks can be handled via the website. The most important are:

The records cannot yet be edited via the admin tool; we are still working on that. Contact us to have them updated manually.

Accepting a new member

Updating the classification

Roles

Questions and suggestions

.ComCom

Committee

The current committee is:

- Tijmen Hoedjes (chair; among other roles, QQ B6)

- Xylander Parqui (QQ B7)

- Ymme van Komen

- Mei Chang van der Werff

- Anne-Wil van Werkhoven

- Jefry el Bhwash

History

Former members (in order of leaving the committee):

- Matthijs Arnoldus

- Jefry el Bhwash (among other roles, QQ B4)

- Laura Geurtsen

- Donne Gerlich (QQ B2)

- Nathan Douenburg

- Tip ten Brink

- Aniek Sips (QQ B3)

- Pien Abbink

- Senne Drent

- Leander Bindt

- Sanne van Beek (QQ B5)

- Matthijs Arnoldus (was a member for a record 5 committee years)

- Tip ten Brink

- Liam Timmerman

- Jesper van der Marel

- Sanne Diepen

The committee was founded in board year 2 (2020/2021) by Matthijs, Jefry, Laura and Donne. With Nathan as designer, they put together a very nice website in a short time, which went live in 2021. That year the first 24-hour meeting was also held. The website was mainly a source of information and a showcase for the association, and of course the home of the Spike. In addition, the .ComCom was responsible for email.

The website was static, which means there was no server that could store data, so you also couldn’t log in. Tip became a member of Dodeka and immediately joined the .ComCom in 2021/2022, and got to work building a “backend” that would make logging in possible. Pien also joined and helped build even more nice pages.

This project went live in January 2023. That year we also got a new member, Leander, who built new features for the backend. Meanwhile, Matthijs and Pien were hard at work maintaining the content, which was becoming an ever larger task.

2023/2024 saw many members come and go, while the content was maintained better than ever by, among others, Jesper. Behind the scenes, work was done on the training registration, and a system for the classifications also went live, for example. Jefry, a member from the very beginning, unfortunately said goodbye. With the knowledge he had gained, he managed to become chair of the Lustrum committee.

What can you learn?

There is an enormous amount to do at the .ComCom, and so of course also a lot to learn. The tasks can be split into the following four main parts:

- Design: the website should of course look good. That is not all, though, because the website also has to be user-friendly. The user interface (UI), i.e. how the user interacts with the website, and the user experience (UX), i.e. the experience of the user, also have to be top-notch. UI/UX design is therefore perhaps even more important, and there is a huge amount to learn about it.

- Content: besides news, the website contains important information about, among other things, how to become a member and when the training times are, as well as information about current and upcoming competitions and activities. This has to be updated constantly. The website is a showcase for the association and plays an important role in attracting new members. This makes the “content” (the text and images) very important. You will become an expert at digging through the FOCUS archives and writing short texts.

- Programming: a large part of the work done at the .ComCom is indeed simply programming. It is quite different from what you might be used to in the courses you take at university, though. It’s not Python plots or algorithms in Java. It’s about building and maintaining a large application (which is now 3+ years old) with multiple components. Below we go into more detail.

- System administration: this is not the sexiest job, but it is very important. After all, we also store private information. In addition, the systems must not simply go down, and we have to make the right choice between server providers.

Programming

Programming, coding, developing: there are many words for what we do. You could even call it software engineering or architecture. We build all of the following:

- A website, the “frontend” (JavaScript, HTML, CSS), using the React framework. Besides static pages, more and more dynamic pages are being added, which fetch up-to-date information from a server that then has to be processed. In addition, we need to keep track of whether you are logged in and which parts of the website you can see. The board must be able to see a table with information about all members and edit it. When data changes on the server, you should also be able to see that immediately.

- A server that is accessible via an API, the “backend” (written in Python), which responds to requests from the website. It stores all passwords in a form that we cannot read and can prove whether you really are who you say you are. In addition, the backend must be able to retrieve information from a database. For this we use SQLite.

System administration

- Managing the server itself (Ubuntu Linux), performing updates and keeping access secure.

Overview

The technical information is divided into four parts:

- Setup: which only teaches how to get everything running on your local machine, so you can dive into the code and start developing right away.

- Architecture: a deeper look at the architecture of the entire application, detailing why we made certain decisions and explaining things at a higher level than you would find by looking at the comments and documentation in the source code.

- Developing: details what you need to modify if you want to make changes. Contains tips on how to actually do ‘development’ easily and which files matter most, among other things.

- Deployment: once you have developed something, it needs to actually go live. This section details all the steps you have to go through to deploy the code into the real world, how to administer the servers and related tasks.

Definitely look at Setup, and also at Deployment if you actually do an update. Otherwise, Developing should be what you look at next. Architecture is only necessary when you want to make big changes or understand things better.

Setup

Here you can find information on how to set everything up (the frontend, backend and database), so you can start developing right away.

No matter what you do, you’ll need to install Git, so check out the instructions for that.

If you are only working on the frontend, all you need to know is how to set up the frontend.

If you are developing the backend, you will probably also want to test things on the frontend, but you will definitely need to first set up the databases locally using a tool called Docker, and then set up the backend application itself.

Git

Terminal cheat sheet

# See what branch you are on and what changes you have.

git status

Installation

To share code with each other, we use a program called Git. We use Git to download the “online” version of the source code (which is hosted on GitHub) so that we can work on it locally on our own machines. We also use Git to upload our changes back to the server.

For an in-depth guide to Git, check out the Pro Git book. For an overview of the most important commands, check out GitLab’s cheat sheet and this other one. Want to perform some specific action? Check out Git Flight Rules. Also, ChatGPT is pretty good at Git nowadays.

You can also use a GUI client instead of the command line (although I nowadays recommend against that), such as the one integrated into Visual Studio Code (with an extension such as GitLens), GitKraken or GitHub Desktop.

But first, you’ll need to install Git itself. On Windows, use the link at the top here (the 64-bit standalone installer). On Linux, use your system package manager (instructions here). On macOS, use Homebrew.

For the Windows installer, I recommend against adding the links to your context menu (so disable Windows Explorer integration). Git Bash Profile for Windows Terminal can be useful (if you’ve installed it). As default editor I recommend something like Notepad++ or Notepad. For the rest the default options should be okay. Be sure to use the recommended option for “Adjusting your PATH environment”.

GUI

If you’re using a GUI program (GitKraken, VS Code or GitHub Desktop), use its documentation to log in to GitHub (make sure you have an account). Then go to the frontend or backend section for further instructions.

Command line (recommended)

Why do I recommend using the command line? Because a lot of developer tools work with it exclusively. Because command-line tools are simpler to develop, they usually have the most features and offer you the most control. At the same time, they also usually allow you to make more mistakes and can have a steeper learning curve, although ChatGPT has made things a lot easier nowadays.

To be able to download and upload repositories, you’ll still need to log in to GitHub. For that, I recommend using the gh CLI. For Windows, I recommend just using the installer. The current version (as of December 2024) can be found here. To find newer versions, go to the releases page and download what’s most similar to “GitHub CLI 2.63.0 windows amd64 installer” (amd64 means x86-64; if you’re using an ARM chip, you should install using WinGet, see here). For general installation instructions, see here.

If you haven’t already, I recommend installing “Windows Terminal”, which is much, much better than the standard Command Prompt. You can find it on the Microsoft Store (if the link doesn’t work, just search for Windows Terminal). You might also want to install PowerShell 7.

Once you have gh installed (you might need to restart your terminal), run gh auth login and follow the steps to log in to your GitHub account (make sure you have one!). Finally, go to the frontend or backend section for further instructions.

Frontend setup

For more information on developing the frontend, see the section on developing the frontend.

Setting up the frontend is the easiest. The frontend is entirely developed and deployed from the DSAV-Dodeka/DSAV-Dodeka.github.io repository. As in the other pages, we assume some familiarity with Git and GitHub, and that you have Git installed (see the guide on Git).

Before you start, find some location on your computer where you want to store all the files. Try and put it somewhere you can easily find it again. For example, make a folder called “Git” in your “Documents” folder.

Next, make sure you have access to a terminal. Windows Terminal, which you can download from the Microsoft Store, is highly recommended. Once you have it installed, go to the “Git” folder (or whatever location you chose) in File Explorer and right-click. It should then have an “Open in Terminal” option.

Otherwise, you can press Ctrl+L in File Explorer and then copy the file path (using e.g. Ctrl+C). This could be a path like C:\Users\tip\Documents\Git (where “tip” is replaced by your username). Then, open Windows Terminal (just search for it on your computer) and type cd "<path>", where <path> is the path you just copied, so in my case that would be cd "C:\Users\tip\Documents\Git".

You now have that folder open in your terminal; it should say something like:

PS C:\Users\tip\Documents\Git >

Ensure you are in that folder for the next part!

Downloading the code

The next step is to clone the repository to your computer. Because we store all our images inside the repository, the full history contains countless copies of large images. In the past, we didn’t properly optimize them so sometimes there were multiple versions of very large images. Thankfully, you don’t have to download the full history. Instead, when cloning, run the following command:

git clone https://github.com/DSAV-Dodeka/DSAV-Dodeka.github.io.git dodekafrontend --filter=blob:none

The --filter=blob:none option executes a “partial clone”, in which all blobs (so the actual file contents) of old commits are not downloaded. They are only downloaded once you actually switch to a commit. This saves a lot of disk space on your computer and makes the clone much faster.

After this is done, a new folder called dodekafrontend will have appeared. This is where all the code is located! I recommend editing the code by using Visual Studio Code and then opening that folder.

Installing NodeJS

The next step is to install fnm (https://github.com/Schniz/fnm), which we use to install NodeJS, a JavaScript runtime (a program that runs JavaScript code) based on the same internal engine as Google Chrome.

Open Windows Terminal (install it from the Microsoft Store if you don’t have it) and run:

winget install Schniz.fnm

Open a new PowerShell terminal and run the following command:

if (-not (Test-Path $profile)) { New-Item $profile -Force }

This creates a “profile” file that we will then edit with the following command:

Invoke-Item $profile

Then, at the end of the file, copy-paste the following:

# Fast Node Manager to run NodeJS

fnm env --use-on-cd --shell powershell | Out-String | Invoke-Expression

Then, open a new PowerShell window and run:

fnm use 24

If it asks for yes or no, type “y” and press Enter. This installs version 24 of NodeJS. Then, when you open a new PowerShell terminal and type node -v, it should respond with something like v24.x.x (where x.x can be any number).

Check https://github.com/Schniz/fnm for more instructions if something does not work, or feel free to ask ChatGPT. Otherwise, you can always ask Tip for help.

Installing dependencies

Next, open the command line in the root folder of the project. The easiest way is to open the folder in an IDE (integrated development environment; I recommend VS Code) and open a terminal there. Or, you can do what we did earlier (open it in File Explorer, then right-click or use Ctrl+L to get the path). Remember to open the dodekafrontend folder itself, not its parent folder (Git or wherever you put it). Then, to install all dependencies, run:

npm install

Running the website locally

Once this is done, we can actually run the website using the command:

npm run dev

Under the hood, this uses Vite to bundle our project into HTML, CSS and JavaScript that the browser can run. The command starts a development server, which lets you open the website in your browser at an address like http://localhost:3000. The port (3000 here) might be different; the terminal output shows the actual address.

Backend setup

OUTDATED: not yet correct for new backend

NOTE: the backend is now developed from the dodeka repository, in the backend subdirectory!

Before you can run the backend locally, you must have a Postgres and a Redis database set up. Take a look at the database setup page for that.

Run all commands from the dodeka/backend folder!

- Install uv. uv is like npm, but for Python. I recommend using the standalone installer.

- Then, set up a Python environment. Run uv python install inside the ./backend directory, which should install the right version of Python, or use one you already have installed.

- Next, sync the project using uv sync --group dev (to also install the dev dependencies). This also sets up a virtual environment at ./.venv.

- Then I recommend connecting your IDE to this environment. Point your IDE to the Python executable inside .venv (.venv/bin/python on Linux and macOS, or .venv\Scripts\python.exe on Windows).

- Currently, the apiserver package is in a /src folder, which is nice for test isolation, but it might confuse your IDE (if you use PyCharm). In that case, find something like the ‘project structure’ configuration and mark the /src folder as a ‘sources folder’ (the exact name might be different in your IDE).

- You might want different configuration options, for example to test sending emails or to connect to a database somewhere else. In that case, you probably want to edit devenv.toml, which contains important config options. However, if you then push your changes to Git, everyone else will get your version, and any secret values you included will be publicly visible on GitHub! Instead, create a copy of devenv.toml called devenv.toml.local and make your changes there. Git will ignore this file.

- Now you can run the server either by running dev.py in src/apiserver (easiest if you use PyCharm) or by running uv run backend (easiest if you use VS Code). The server automatically reloads when you change any files, and it tells you at which address you can access it.

Running for the first time

If you are running the server for the first time and/or the database is empty, be sure to set RECREATE = "yes" in your local config file (i.e. devenv.toml.local). Set it back to "no" after doing this once, otherwise the database is recreated each time the server starts.

Architecture

This section describes all the different components that make up the website and how they interact. It describes why we made certain choices, which tools and frameworks we use, and more.

Frontend vs Backend

What is a “frontend”, what is a “backend”, why do we have this separation?

First, what is the difference? There is no perfect definition, but in general the “frontend” is the part that is exposed to the end user: what they actually see and interact with. In our case, it is what runs in the browser.

The “backend” is the part that cannot run in the browser, for example because it needs dynamic access to the database. It runs on a server, away from the user. Its job is to store things that must be kept secure and should not be visible to everyone, like passwords or personal information. For that, it needs a database.

To make itself usable, the backend exposes a so-called “API”, which is basically a list of functions that can be called over the internet. In particular, it mostly adheres to the principles of a RESTful API (there are many resources on the internet about it).

In general, when the frontend wants data, it sends a JSON request (a specific format used for structuring data) … #TODO

There are two main reasons. The first, more technical one, is that by separating the frontend from the backend, you can develop them separately. Someone who wants to update how something looks doesn’t have to worry about any logic that should happen on the server. This allows teams to work more independently. It’s also a “separation of concerns”, which ensures that functionality doesn’t get too tangled. It also allows fully replacing one of the two components without worrying about the other, which might be useful in the future.

However, the more important … #TODO

Developing

Prerequisites

Development isn’t scary, but it’s probably new. Lots of jargon and all kinds of tools will be thrown around. Just go with it: you can only learn by doing.

Below I’ll introduce some concepts you need to understand to develop for the website. Some of them you will already know or have heard of.

File system

Servers

Linux

Command line

Version control / Git

Browsers / JavaScript

Package managers

NodeJS / npm

Cheatsheet

This contains the most important information to get you up and running and productive.

Git

Open the command line in the folder where you cloned DSAV-Dodeka.github.io (dodekafrontend if you followed the setup above), or open the terminal in VS Code (Ctrl+`)

General workflow

# go to the main branch

git checkout main

# update the repository

git pull

# create and switch to a new branch (replace branchname with your desired name: no spaces, and preferably only lowercase letters and dashes)

git switch -c "branchname"

# stage all changes (edited, new and deleted files) for the next commit

git add -A

# commit the changes (change the description to something useful)

git commit -m "commit description"

# upload changes to github.com (replace 'branchname' with what you used earlier)

# in case you already pushed this branch before, you can just do git push

git push --set-upstream origin branchname

Status

See the current status (shows what branch you are on)

git status

Go to a branch

If you want to go to a particular branch, say ‘branch-xyz’, do:

git checkout branch-xyz

Update a branch

If you want to update your current branch with changes on github.com:

git pull

If you have uncommitted local changes that conflict with the incoming changes, this might not work.

Delete all local changes (BE CAREFUL)

If you made changes you don’t know how to revert and don’t care about keeping, run (be careful!) the command below. Note that it does not delete new files that Git isn’t tracking yet.

git reset --hard

Frontend

Open the command line in the folder where you cloned DSAV-Dodeka.github.io (dodekafrontend if you followed the setup above), or open the terminal in VS Code (Ctrl+`)

Run the website locally

npm run dev

The website is now available in your browser at http://localhost:3000 (or whichever address the terminal shows).

Update dependencies

npm install

Frontend development

For more in-depth details on why certain decisions were made, see the architecture section.

The frontend development can be divided roughly into three sections:

- Updating the content (don’t modify pages, just the text and images). See here. For information on images and how to optimize all images, see here.

- Adding new static pages (focused on page design). See here.

- Creating dynamic pages (and integrating them with the backend). See the section in the backend here.

React and React Router

OUTDATED: not yet up to date for new React Router setup

We use a concept called “client-side routing”, which means that code on the page itself sends you to the subpages. This is also known as a “single-page application”. For more details, see the section on architecture.

Routes

Define a new route

To define a route, add a <Route> element inside the <Routes> element in src/App.tsx:

<Route path="/vereniging" element={<Vereniging />} />

Here <Vereniging /> is the React component that renders the entire page. You will need to import it from the right file. Every page, including every subpage, needs a separate route like this. path="/vereniging" indicates the path at which the page will be visible.

Add it to the menu bar

The src/components/Navigation Bar/NavigationBar.jsx file contains all the different menu items. Don’t forget to add it to both the navItems and the navMobileContainer.

.tsx vs .jsx?

For new components, prefer .tsx. This enables TypeScript type checking, which makes development easier by showing hints about which properties are available, and also helps prevent bugs.

Authentication

We use React Context to make the authState available everywhere. Inside the component, simply put:

const {authState, setAuthState} = useContext(authContext)

Then, by checking authState.isLoaded && authState.isAuthenticated you can check whether someone has been authenticated as a member and whether the route should be available. Note: any data that you store on the frontend is publicly available (either through the source code, but also using ‘inspect element’ in the browser)! So any sensitive data should be stored in the backend and retrieved using requests. See the section on integrating the backend and frontend.

You can use authState.scope.includes("<role>") to check whether someone has a role, but remember that anyone can edit this code in their browser. So no information on pages stored in the frontend repository should be sensitive. It’s fine to decide whether to display the page skeleton based on the authState, but don’t show private data based on that.

Content

The content can be found primarily in ./src/content. There you can find many JSON files. JSON (JavaScript Object Notation) is a format that can easily be read by both machines and humans. In the page components, we import these files and use their contents to render the text on the website.

The images can be found in ./src/images. Again, we import these images on pages using the getUrl function.

Images

Optimizing images

This script only works on Linux (or WSL).

Dependencies

- img-optimize - https://virtubox.github.io/img-optimize/ (optimize.sh)

- imagemagick - https://imagemagick.org/script/download.php (convert)

- jpegoptim

- optipng

- cwebp

The last 3 can be installed on Debian/Ubuntu using:

sudo apt install jpegoptim optipng webp

Once you’ve downloaded the first script, run the following script from the img-optimize main folder (be sure to replace <DSAV-Dodeka repository location> by the correct path):

#!/bin/bash

# Script by https://christitus.com/script-for-optimizing-images/ (Chris Titus)

# Modified by Tip ten Brink

FOLDER="<DSAV-Dodeka repository location>/src/images"

# Resize JPG and PNG images to a maximum width, then a maximum height, keeping proportions (using ImageMagick)

# The parentheses are required: without them, -exec only applies to the -iname '*.png' branch, so JPGs were skipped

# The '>' flag means images are only shrunk, never enlarged

find "${FOLDER}" \( -iname '*.jpg' -o -iname '*.png' \) -exec convert {} -verbose -resize '2400x>' {} \;

find "${FOLDER}" \( -iname '*.jpg' -o -iname '*.png' \) -exec convert {} -verbose -resize 'x1300>' {} \;

# Optimize.sh is the img-optimize script

./optimize.sh --std --path "${FOLDER}"

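We downscale images so they are at most 2400 pixels wide and 1300 pixels high (keeping their proportions and leaving smaller images alone), as higher resolutions don’t make a noticeable difference on the website.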
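To make the effect of the two resize passes concrete, here is a small Python sketch of the size calculation. It only approximates what ImageMagick does (its rounding may differ by a pixel), and fit_within is a hypothetical helper for illustration; the script above does the real work.

```python
def fit_within(width: int, height: int, max_w: int = 2400, max_h: int = 1300) -> tuple[int, int]:
    """Approximate '-resize 2400x>' followed by '-resize x1300>'.

    Each pass only shrinks (the '>' flag) and keeps the aspect ratio.
    """
    # Pass 1: limit the width, scaling the height proportionally
    if width > max_w:
        height = int(height * max_w / width + 0.5)
        width = max_w
    # Pass 2: limit the height, scaling the width proportionally
    if height > max_h:
        width = int(width * max_h / height + 0.5)
        height = max_h
    return width, height
```

For example, a 4800x3200 photo first becomes 2400x1600 and then 1950x1300, while a 1000x800 image is left untouched.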

Backend development

OUTDATED: not yet accurate for new backend

Project structure

All the application code is inside the backend/src directory. This contains five separate packages, of which three act as libraries and two as applications.

- The datacontext library is fully standalone. It contains special logic for implementing dependency injection, which is useful for replacing database-reliant functions in tests while keeping good developer ergonomics. Ensure it doesn’t import code from any other package!

- The store library is fully standalone and provides the primitives for communicating with the databases (both DB and KV). Ensure it doesn’t import code from any other package!

- The auth library relies on both the datacontext and store libraries. It provides an application-agnostic implementation of all the authorization server logic. In an ideal world, the authorization server is a separate application. To stay as close to this as possible, we develop it as a separate library. However, the library does not know about HTTP or anything like that: the routes are implemented in our actual application, as are some things that rely on a specific schema.

- The schema package contains the definition of our database schema (in schema/model/model.py). It can be extracted during deployment and then used for applying migrations, hence it is also something of an application.

- The apiserver package is our actual FastAPI application. It relies on all four packages above. However, it also has some internal logic that is more “library”-like. Furthermore, to prevent circular imports among other things, there is a certain “dependency order” we want to keep. It is as follows:

  - resources.py contains two variables that make it easier to get specific paths, in particular for importing files from the resources folder.

  - define.py contains a number of constants that are unlikely to ever change and do not really depend on the environment the application is deployed in (whether it is development, staging, production, etc.). It also contains the logic for loading things that do depend on the ‘general’ environment, but not on the ‘local’ environment. As a rule of thumb, something like a website URL will always be the same for an environment, but an IP address, a port or a password might differ.

  - env.py loads this local configuration, which includes things like passwords and where exactly to find the database.

  - Then we have the src/apiserver/lib module, which consists mostly of logic that does not load its own data. While it might cause side effects (like sending an email), it should always cause the same side effects for the same arguments (so it should not load data). In general, most functions and logic here should be pure. More importantly, they should not import anything from the src/apiserver/app module.

  - Next there is src/apiserver/data. This includes all the simple functions that perform a single action relating to external data (so the DB or KV). Mostly, these functions wrap store functions, but for a specific table or schema. The most important are the functions in data/api, i.e. the data “API”, which is the way the rest of the application interacts with data. Inside data/context there are also context functions, which should call multiple data/api functions and other effectful code that you want to easily replace in tests (like generating something randomly). See data/context/__init__.py for more details.

  - Finally, we come to src/apiserver/app. This contains the most critical part, namely the routers, which define the actual API endpoints. Furthermore, there is the modules module, which mostly wraps multiple context functions. See app/modules/__init__.py for more details.

  - Next, the app_... files define and instantiate the actual application, while dev.py is an entrypoint for running the program in development.

Other

Important to keep in mind

Always add a trailing “/” to endpoints.
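One likely reason for this rule: by default, FastAPI (through Starlette’s redirect_slashes behaviour) answers a request for a path without the trailing slash with a redirect to the slashed route, which costs an extra round trip. If you build request URLs in a Python script, a small helper can enforce the convention. endpoint_url is a hypothetical helper, not part of the codebase.

```python
from urllib.parse import urljoin


def endpoint_url(base: str, path: str) -> str:
    """Join a backend base URL and an endpoint path, forcing a trailing slash."""
    if not path.endswith("/"):
        path += "/"
    # Without a trailing '/' on base, urljoin would replace its last path segment
    if not base.endswith("/"):
        base += "/"
    # Strip the leading '/' so the path is appended to base instead of replacing it
    return urljoin(base, path.lstrip("/"))
```

For example, endpoint_url("http://localhost:8000", "admin/classification/points") gives "http://localhost:8000/admin/classification/points/" (the base address is only a placeholder).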

Testing

We have a number of tests in the tests directory. To run them and check that you didn’t break anything important, run poetry run pytest.

Static analysis and formatting

To improve code quality, readability and catch some simple bugs, we use a number of static analysis tools and a formatter. We use the following:

- mypy checks if our type hints check out. Run it using poetry run mypy. This is the slowest of all the tools.

- ruff is a linter, so it checks for common mistakes, unused imports and other simple things. Run it using poetry run ruff src tests actions. To automatically fix issues, add --fix.

- black is a formatter. It ensures we never have to discuss formatting mistakes: we just let the tool handle it for us. Run it using poetry run black src tests actions.

You can run all these tools at once using the Poe taskrunner, by running the following in the terminal:

poe check

Continuous Integration (CI)

Tests (including some additional tests that run against a live database) and all the above tools are run in GitHub Actions. If you open a Pull Request, these checks run for every commit you push. If any of them fail, the “check” fails, indicating that we should not merge.

VS Code settings

VS Code doesn’t come with all the tools that are necessary or useful for developing a Python application. Therefore, be sure the following extensions are installed:

- Python (which installs Pylance)

- Even Better TOML (for .toml file support)

You probably want to update .vscode/settings.json as follows:

{

"python.analysis.typeCheckingMode": "basic",

"files.associations": {

"*.toml.local": "toml"

},

"files.exclude": {

"**/__pycache__": true,

"**/.idea": true,

"**/.mypy_cache": true,

"**/.pytest_cache": true,

"**/.ruff_cache": true

}

}

This enables basic type checking in Pylance, treats .toml.local files as TOML, and ensures that unnecessary files (caches and IDE folders) are not shown in the Explorer.

Routes

Integrating the backend/frontend

The database is the only place you can securely store private information. Everything stored in the repositories can easily be accessed by anyone. In the future, we might want to make an easier way to store private content.

So, if you want to display secret information stored in the backend on the frontend, you need to load it using an HTTP request. To make this easier, we use two libraries, TanStack Query and ky.

A query

A “query” automatically runs the function you give it once the page loads, and keeps the resulting data up to date. It can be enabled or disabled, for example based on whether or not someone is authenticated.

An example of a query is (defined in src/functions/queries.ts):

export const useAdminKlassementQuery = (

au: AuthUse,

rank_type: "points" | "training",

) =>

useQuery(

[`tr_klass_admin_${rank_type}`],

() => klassement_request(au, true, rank_type),

{

staleTime: longStaleTime,

cacheTime: longCacheTime,

enabled: au.authState.isAuthenticated,

},

);

Here, useQuery is a function from the TanStack Query library, while klassement_request is defined by us. It is important that the query key (tr_klass_admin_${rank_type}) is unique for each distinct request; otherwise different queries share a cache entry and the caches will not work correctly.

Let’s look at klassement_request (inside src/functions/api/klassementen.ts):

export const klassement_request = async (

auth: AuthUse,

is_admin: boolean,

rank_type: "points" | "training",

options?: Options,

): Promise<KlassementList> => {

// ... omitted for brevity

let response = await back_request(

`${role}/classification/${rank_type}/`,

auth,

options,

);

const punt_klas: KlassementList = KlassementList.parse(response);

// ... omitted for brevity

return punt_klas;

};

Here, back_request is a function we defined, which calls the backend using the ky library. It performs a simple GET request, and we then validate the response using zod (the .parse call). The backend checks the credentials in the auth argument, returning the data only if you have the right scope and are the right user.

How is the result of this query used now? Let’s see (in src/pages/Admin/components/Klassement.tsx):

const q = useAdminKlassementQuery({ authState, setAuthState }, typeName);

const pointsData = queryError(

q,

defaultTraining,

`Class ${typeName} Query Error`,

);

pointsData now simply contains the data you want. All the data loading happens in the background. queryError is also defined by us and ensures that any potential error is properly caught. While the data is still loading, the default data (defaultTraining in this case) is displayed instead. In the future we might want to display this more nicely in the UI, because right now the page first shows the default data and then flickers when it switches to the loaded data.

Developing the deployment setup

Deployment

This section discusses deployment: everything to do with getting the code live so that the application can actually be used.

Server

Our server is hosted by Hetzner, a German cloud provider. It is an unmanaged Ubuntu Linux virtual machine (VM). Being a VM means that we do not have a physical machine to ourselves, but share the hardware with other Hetzner customers. We have access to a limited number of CPU cores, memory and storage.

It is unmanaged, meaning we have full control over the operating system but also have to keep it up to date ourselves. The choice for Ubuntu was also ours. It has no GUI, only a command line, so getting familiar with the Unix command line is very helpful. By default, it uses the bash shell.

Webportal

The webportal for the server can be accessed from https://console.hetzner.cloud. The account we use is dsavdodeka@gmail.com. You need 2FA to log in.

The most important things you can do from the portal are:

- See graphs of CPU, disk and network load, as well as memory usage

- Manage backups

- Access to root console

Connecting: SSH

To connect to the server, we use SSH. To be able to connect, you need to have an “SSH key” configured. To add one, you must first generate a private-public SSH keypair.

Then, the public part must be added to the /home/backend/.ssh/authorized_keys file. Note that this file requires specific permissions (700 on the .ssh directory, 600 on the file itself), so if something is not working, check whether these are correct.

Currently, we have the following important SSH settings:

PermitRootLogin no

PasswordAuthentication no

This ensures you can only log in with an SSH key, not with a simple user password, and that nobody can log in directly as root.

We only allow logins through the backend user (see next section), so keep /root/.ssh/authorized_keys empty.

It is recommended to not add SSH keys through the web console, as these are not easily visible inside the authorized_keys file.

If you no longer have access to any keys, use the web console to log in as root, then switch to the backend user with su backend and edit the authorized_keys file.

Connecting

Connecting is simple: run ssh backend@<ip address>. If your default identity is not a key that has access, you might need to use the -i flag to select the right key on your client.

Once you have done this, you can use the server as if it were your own PC’s command line.

root vs backend user

To keep things safe, avoid using the root user as much as possible. Instead, use backend. You can use sudo to run privileged commands and, if necessary, log in as root using su root.

Keeping it up to date

To keep the server up to date, occasionally run:

sudo apt update

sudo apt upgrade

Occasionally, a new Ubuntu release also comes out. It is best to only upgrade to a new release once there is a new LTS version.

Required packages

For running deployment scripts, three main tools must be installed: Poetry, Docker (including Docker Compose) and the GitHub CLI. Make sure these are updated occasionally.

Furthermore, the server also requires nginx as a reverse proxy and certbot for SSL certificates. We use Ubuntu’s packaged nginx and we use a snap package for certbot.

File locations

Currently, the dodeka repository is in /home/backend/dodeka.

Demo

Running the auth server

CGO_ENABLED=1 go run . --insecure --env-file .env.demo --interactive --no-smtp

Running the backend

uv run --locked --no-dev backend

Staging and production

For a complete setup including backend, first ensure the containers are built using GitHub Actions for the environment you want to deploy. Then, SSH into the cloud server you want to deploy it to.

The following tools are necessary, in addition to those assumed to be installed on a standard Linux server:

- Python 3.10 (matching the version requirements of the dodeka repository)

- Poetry (to install the dependencies in the dodeka repository)

- gh (GitHub CLI, logged into an account with access to the dodeka repository; optional if authenticated by other means)

- nu (a shell scripting language, see also how to install.)

- tidploy (tool built specifically for deploying this project)

- bws (Bitwarden Secrets Manager CLI)

The last three tools are all Rust projects, so they can be built from source using cargo install <tool name>. However, compiling can be very slow on small VMs, so installing them as prebuilt binaries using cargo binstall <tool name> is recommended (install binstall first using cargo install cargo-binstall).

To deploy, clone the dodeka repository and enter its main directory. Make sure you have pulled recently, so you have the newest versions of the deploy scripts. Then, run tidploy auth deploy and enter the Bitwarden Secrets Manager access token.

Then, you can deploy using tidploy deploy production (or tidploy deploy staging for staging). You can also use the Nu shortcut:

nu deploy.nu create production

If you want to specify the specific Git tag (i.e. a release or commit hash) to deploy, use:

nu deploy.nu create staging v2.1.0-rc.3

That’s it!

Shutdown

You can observe the active compose project using docker compose ls. Then you can shut it down by running (from the dodeka repository):

nu deploy.nu down production

If the suffix of the Docker Compose project name is not latest, pass that suffix as an extra argument. In general it is equal to the tag you deployed with (‘latest’ by default), but with periods replaced by underscores. For example, a deployment created with nu deploy.nu create staging v2.1.0-rc.3 can be shut down with nu deploy.nu down staging v2_1_0-rc_3.
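If you script deployments yourself, the naming rule from the paragraph above fits in a tiny Python helper. This only restates that rule; compose_suffix and down_command are hypothetical names and not part of deploy.nu.

```python
def compose_suffix(tag: str = "latest") -> str:
    """Project-name suffix for a deployed tag: periods become underscores."""
    return tag.replace(".", "_")


def down_command(env: str, tag: str = "latest") -> list[str]:
    """Build the shutdown command; the suffix is only passed when it isn't 'latest'."""
    cmd = ["nu", "deploy.nu", "down", env]
    suffix = compose_suffix(tag)
    if suffix != "latest":
        cmd.append(suffix)
    return cmd
```

The returned list could be passed to subprocess.run from inside the dodeka repository.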

Hetzner

Preparing SSH

First, we rebuild the server from the Ubuntu 24.04 image.

We reset the root password using Rescue -> Reset root password. I recommend changing it again to a new password once logged in (using passwd root).

Log in using the console GUI.

Edit the SSH server settings in /etc/ssh/sshd_config and set:

PermitRootLogin no

PasswordAuthentication no

Restart the SSH service (systemctl restart ssh) for these settings to take effect.

Then we create a new user (-m creates the home directory) and add them to the sudo group:

useradd -m backend

adduser backend sudo

Then run passwd backend to set a password.

Switch to the user using su backend.

The default shell might not be bash (for example if your prompt consists of only ‘$’). In that case, run:

chsh --shell /bin/bash

Create a new directory with mkdir /home/backend/.ssh. Enter this directory (using cd) and run nano authorized_keys to create and open a new file there.

Paste in your SSH public key (generate one using ssh-keygen -t ed25519 -C "your_email@example.com"; the public key looks something like ssh-ed25519 .... tiptenbrink@tipc), then save the file (Ctrl-O, then Enter) and exit nano (Ctrl-X). If copy-paste is not working, try a different browser and make sure you’re not in GUI mode or similar.

Then, ensure the file has the correct permissions:

chmod 700 /home/backend/.ssh && chmod 600 /home/backend/.ssh/authorized_keys

Then you can log in with ssh backend@<ip address here> (make sure it uses the proper private key, so you might have to use the -i option).

Note that it’s often hard to find out what’s going on when it’s not working. Be sure that the string in authorized_keys precisely matches your public key. Sometimes copy-pasting does not work correctly and some characters are changed (like = to -, or _ to -), or you may have missed a character at the beginning or end. It really needs to match!
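A quick way to catch many (not all) copy-paste errors is to check the key line’s internal structure: in the OpenSSH public key format, the base64 blob begins with the key type as a length-prefixed string, so it must agree with the first word of the line. The Python sketch below does that check; check_public_key_line is a hypothetical helper. For a definitive comparison, run ssh-keygen -lf on the key file on both machines and compare the fingerprints.

```python
import base64
import struct


def check_public_key_line(line: str) -> bool:
    """Sanity-check an OpenSSH public key line like 'ssh-ed25519 AAAA... comment'."""
    parts = line.strip().split()
    if len(parts) < 2:
        return False
    key_type, blob_b64 = parts[0], parts[1]
    try:
        # validate=True rejects characters outside the base64 alphabet (like '-')
        blob = base64.b64decode(blob_b64, validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    if len(blob) < 4:
        return False
    # First 4 bytes: big-endian length of the key type string embedded in the blob
    (type_len,) = struct.unpack(">I", blob[:4])
    return blob[4 : 4 + type_len] == key_type.encode()
```

A character lost at the start of the line or a corrupted base64 character usually makes this return False; a changed letter deep inside the key can still slip through, which is why the fingerprint comparison is the final word.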

SSH niceties

Install “xauth” (while logged in as root)

apt install xauth

If you use a less common terminal emulator, you might have to install its terminfo (xterm) files on the server. Consult the documentation of your terminal for details.

From this point on we never need to be logged in as root anymore! Always log in via SSH from your own terminal.

Dependencies

Update packages

sudo apt update

sudo apt upgrade

You might have to reboot after this: sudo reboot.

Install a basic C compiler and other useful packages

sudo apt install unzip build-essential

Install NodeJS

Using fnm:

curl -o- https://fnm.vercel.app/install | bash

Be sure to log out and back in (or start a new terminal) afterwards.

Set up NodeJS 24:

fnm use 24

Verify with node -v, should return something starting with v24.

Install Python (with uv)

First, we install uv:

curl -LsSf https://astral.sh/uv/install.sh | sh

Then install Python (free-threading build) with:

uv python install 3.14t

Install Go

Go here to get the latest version (for linux and amd64/x64):

https://go.dev/doc/install

At the time of writing that is go1.25.5.linux-amd64. Download it with:

curl -OL https://go.dev/dl/go1.25.5.linux-amd64.tar.gz

Then put it in the install location with:

sudo rm -rf /usr/local/go && sudo tar -C /usr/local -xzf go1.25.5.linux-amd64.tar.gz

Finally, add Go to your PATH by appending the following to the end of ~/.profile (it takes effect after logging in again, or after running source ~/.profile):

export PATH="$PATH:/usr/local/go/bin"

Install backend (and frontend)

Strictly speaking, we don’t need NodeJS or the frontend on the server, as we do not host the frontend ourselves. However, I will explain how to set it up here, since that can be useful for a demo.

Frontend

First, let’s clone the frontend to the home directory.

git clone https://github.com/DSAV-Dodeka/DSAV-Dodeka.github.io.git frontend --filter=blob:none

Set up reverse proxy

Installing Caddy

We will install Caddy. Follow Caddy’s installation docs for the exact commands (the ones for the stable release, which include sudo apt update among other things).

Caddyfile

Permissions

The Caddy server runs as the caddy user. If you want to run the demo using a file server, you have to give it permission to access the files generated when building the frontend. Therefore, we create a new group, add both the backend and caddy users to it, and give that group access to the frontend build output.

The following snippet shows all commands you have to run.

# Create a shared group called "webdata"
sudo groupadd webdata
# groupadd: creates a new group
# webdata: the name of the group to create

# Add both users to the webdata group
sudo usermod -aG webdata backend
sudo usermod -aG webdata caddy
# usermod: modify a user account
# -a: append (add to group without removing from other groups)
# -G: specify supplementary group(s) to add the user to
# webdata: the group to add them to
# backend/caddy: the username to modify

# Change ownership of the client folder
sudo chown -R backend:webdata /home/backend/frontend/build/client
# chown: change file owner and group
# -R: recursive (apply to all files and subdirectories)
# backend:webdata: set owner to "backend" and group to "webdata"
# /home/...: the target directory

# Set permissions: owner full, group read+execute, others none
sudo chmod -R 750 /home/backend/frontend/build/client
# chmod: change file mode/permissions
# -R: recursive
# 750: octal permission code
# 7 (owner): read(4) + write(2) + execute(1) = full access
# 5 (group): read(4) + execute(1) = can read and traverse
# 0 (others): no access

# Make new files inherit the group (setgid bit)
sudo chmod g+s /home/backend/frontend/build/client
# g+s: set the setgid bit on the directory
# This means new files/folders created inside will inherit
# the "webdata" group instead of the creator's primary group

# Ensure parent directories are traversable
chmod 755 /home/backend
chmod 755 /home/backend/frontend
chmod 755 /home/backend/frontend/build
# 755:
# 7 (owner): full access
# 5 (group): read + execute
# 5 (others): read + execute
# "execute" on a directory means permission to traverse/enter it

# Restart caddy to pick up the new group membership
sudo systemctl restart caddy
# systemctl: control the systemd service manager
# restart: stop and start the service
# caddy: the service name

# Log out and back in (or run `newgrp webdata`) for the backend user to pick up the new group
# newgrp webdata: starts a new shell with webdata as the active group
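To see what the chmod commands above actually do, you can apply them to a throwaway copy of the directory layout. This is a sketch that only touches a temporary directory (the placeholder file index.html and the directory names are illustrative); it assumes GNU stat, as found on Ubuntu/Debian. The top directory should end up as 2750 (drwxr-s---), the subdirectory and the file as 750, and a directory created afterwards inherits the setgid bit.

```shell
# Scratch tree that mimics the layout; /home/backend is never touched.
demo_root=$(mktemp -d)
client="$demo_root/frontend/build/client"
mkdir -p "$client/assets"
touch "$client/index.html"   # placeholder file

# The same mode changes as in the snippet above, on the scratch tree.
chmod -R 750 "$client"
chmod g+s "$client"

# Something created later, e.g. by a rebuild writing into the directory.
mkdir "$client/new-dir"

# %a: octal mode (special bits included), %A: symbolic mode, %n: name
stat -c '%a %A %n' "$client" "$client/assets" "$client/index.html" "$client/new-dir"

# Keep the results, then clean up the scratch tree.
client_mode=$(stat -c %a "$client")
assets_mode=$(stat -c %a "$client/assets")
file_mode=$(stat -c %a "$client/index.html")
if [ -g "$client/new-dir" ]; then newdir_setgid=yes; else newdir_setgid=no; fi
rm -rf "$demo_root"

# On the real server, these show whether caddy can actually reach the files:
#   namei -l /home/backend/frontend/build/client   # permissions of each path component
#   sudo -u caddy ls /home/backend/frontend/build/client
```

Note that `chmod -R 750` also marks regular files as executable. If you would rather avoid that, `chmod -R u=rwX,g=rX,o=` is a common alternative: the capital `X` only adds execute permission to directories (and to files that already have execute permission for someone).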

Administration

Google Workspace

Google Workspace is used to manage e-mail.

In order to send e-mails from the backend, we use SMTP.

We use the following two Google Support articles:

- https://web.archive.org/web/20250517200708/https://support.google.com/a/answer/176600?hl=en

- https://web.archive.org/web/20250508111359/https://support.google.com/a/answer/2956491

We chose an SMTP relay, currently called “SMTP voor e-mail versturen via code” (Dutch for “SMTP for sending e-mail via code”), with TLS required, only allowed IP addresses, and SMTP authentication disabled. SMTP authentication is disabled because the allowed IP addresses already serve as authentication, so we don’t have to deal with app passwords or other complexity.

Currently, the only allowed IP addresses should be the backend Hetzner server and the home networks of .ComCom members who need to test sending e-mail.

To send an e-mail, connect to smtp-relay.gmail.com on port 587 from an allowed IP address, upgrade the connection to TLS (STARTTLS), and then send the message over SMTP as usual.

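In Python, smtplib works without any extra configuration. In some other languages (like Go), however, you need to make sure that the local name the client announces in its EHLO greeting is set to your actual IP address. In Go's net/smtp, for example, the client announces itself as `localhost` unless you call `Client.Hello` with a different name before any other command.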
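For a quick test from the command line, curl can also talk SMTP. This is a minimal sketch: the sender, recipient, and EHLO name (203.0.113.5, a documentation-only IP address) are hypothetical placeholders, and the function names are just for illustration. curl uses the path part of an smtp:// URL as the name it announces in EHLO, and `--ssl-reqd` makes it upgrade the connection with STARTTLS or fail.

```shell
# Hypothetical values: replace with a real sender, recipient and the
# sending machine's public IP address.
FROM_ADDR="noreply@example.org"
TO_ADDR="someone@example.org"
EHLO_NAME="203.0.113.5"

# Builds a minimal message (headers, blank line, body) with CRLF line endings.
make_test_message() {
  printf 'From: %s\r\nTo: %s\r\nSubject: SMTP relay test\r\n\r\nSent via smtp-relay.gmail.com.\r\n' \
    "$FROM_ADDR" "$TO_ADDR"
}

# Sends the message through the relay. The URL path ($EHLO_NAME) is what
# curl announces in EHLO; --ssl-reqd requires STARTTLS on port 587.
send_test_message() {
  make_test_message | curl --silent --show-error \
    --url "smtp://smtp-relay.gmail.com:587/$EHLO_NAME" \
    --ssl-reqd \
    --mail-from "$FROM_ADDR" \
    --mail-rcpt "$TO_ADDR" \
    --upload-file -
}

# Only works from an allowed IP address, so it is not called here:
#   send_test_message
```

If the relay rejects the connection, the EHLO name and the list of allowed IP addresses are the first things to check.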